Put simply, Kubernetes is an orchestration system for deploying and managing containers. Using Kubernetes, you can operate containers reliably across different environments by automating management tasks such as scaling containers across Nodes and restarting them when they stop.

Kubernetes provides abstractions that let you think in terms of application components, such as Pods (containers), Services (network endpoints), and Jobs (one-off tasks). It has a declarative configuration model that simplifies setup and defends against drift—you define what your environment should look like, then the system automatically applies actions to achieve that state.

Why Should Software Developers Learn Kubernetes?

A developer who understands how to use Kubernetes can easily replicate production infrastructure and become more involved with operations. You can use the same tools and processes to deploy your work to any environment, whether locally on your machine or at production scale in the cloud.

Knowledge of Kubernetes also gives you greater ownership of your system. You can make impactful changes that improve the deployment and operating experience, both for yourself and others. When all application environments run using Kubernetes, you know that everyone is working to the same shared deployment model, reducing the scope for errors and discrepancies. If an operator requests a change due to problems in production, you can test and validate it yourself without waiting for their feedback. Just like that, you’ve tightened the development cycle and improved productivity.

Given the surging interest in Kubernetes over the past few years, gaining familiarity can help future-proof your career, too. A 2023 survey from Dynatrace reports a 127 percent increase in adoption during 2022, and 96 percent of organizations surveyed by the Cloud Native Computing Foundation are either using or evaluating the technology. Kubernetes is in demand, so understanding how it works, how it affects your applications, and how to use it can improve your job prospects.

Key Components of Kubernetes

As we briefly mentioned already, Kubernetes has its own vocabulary of object types that represent different kinds of resources inside your cluster. Some components are part of the cluster itself, such as Nodes, whereas others, like Pods and Services, model parts of your application. You’ll want to familiarize yourself with the terms as you dive into this guide.

Pods

Pods are the compute units within your Kubernetes cluster. Each instance of a Pod holds one or more containers, all of which are scheduled to the same Node in your cluster.

Manual interactions with individual Pods are relatively rare. They’re usually created by higher-level controllers such as ReplicaSets and Deployments, which manage Pod replication and scaling for you.
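As a rough illustration of a Pod that holds more than one container, here is a minimal manifest sketch. The Pod name, the container names, and the sidecar command are hypothetical and chosen only for this example; both containers would be scheduled together onto the same Node.

```yaml
# Sketch only: a single Pod with two containers that share one Node.
# All names below are illustrative assumptions, not part of the guide.
apiVersion: v1
kind: Pod
metadata:
  name: web-with-sidecar
spec:
  containers:
    # Main application container
    - name: web
      image: nginx:latest
    # Helper container that runs alongside the main one
    - name: sidecar
      image: busybox:latest
      command: ["sh", "-c", "while true; do date; sleep 30; done"]
```

In practice you would usually place a Pod template like this inside a Deployment rather than creating the Pod directly.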

Nodes

A Node is a physical or virtual machine that’s part of your cluster. It runs an agent process called kubelet that maintains communication with the central control plane.

Nodes are responsible for running your containers. When you create a new Pod, the control plane will select the best Node to run it, based on criteria such as resource utilization and any Node selectors you’ve set. The chosen Node pulls the required images and starts your containers. If the Node goes offline, Kubernetes notices its absence and reschedules its Pods to different members of the cluster.
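To make the scheduling criteria more concrete, the sketch below shows a Pod that sets a Node selector and resource requests. It assumes that at least one Node carries a label of disktype=ssd; that label, the Pod name, and the request values are hypothetical.

```yaml
# Sketch only: constrain scheduling with a Node selector and resource requests.
# The disktype=ssd label is an assumption; your Nodes may use different labels.
apiVersion: v1
kind: Pod
metadata:
  name: nginx-on-ssd
spec:
  # Only Nodes that have this label are eligible to run the Pod
  nodeSelector:
    disktype: ssd
  containers:
    - name: nginx
      image: nginx:latest
      resources:
        # The scheduler looks for a Node with at least this much unreserved capacity
        requests:
          cpu: 250m
          memory: 128Mi
```

If no Node matches the selector or has enough free capacity, the Pod stays in the Pending state until one does.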

Deployments

Deployments are Kubernetes objects that provide declarative updates for Pods. They get their name because you use them to deploy your application’s main containers without manually creating and maintaining Pod objects.

Deployments provide several advantages. When you add a Deployment to your cluster, you can define the container image to run and the number of replicas to create. Kubernetes automatically creates the correct number of Pods to match the requested replica count, distributing them across the Nodes in your cluster.

Add more replicas by modifying the Deployment object’s configuration, and Kubernetes will automatically start additional Pods to satisfy the new specification. If you reduce the replica count, Kubernetes will delete older Pods to scale down.

Deployments also allow you to pause a rollout, then resume it later on. You can roll back to previous releases, too, which lets you quickly mitigate problems introduced by new app versions.

Clusters

Your Kubernetes cluster is the overall environment that your apps run within. It includes the Nodes within your cluster, as well as the control plane components that manage the entire system.

A cluster’s control plane includes the API server you interact with, the scheduler for allocating Pods to Nodes, and controllers which implement specific cluster behaviors. It also features an etcd datastore which persists the cluster’s state.

Namespaces

Namespaces allow you to organize cluster resources into different logical groups.

Conveniently, object names in your cluster only need to be unique within each namespace.

Namespaces are often used to divide clusters into several environments, such as development and production. They allow multiple users and applications to coexist in a single cluster. You can control resource utilization within namespaces by setting up quotas, which prevent individual namespaces from consuming all available capacity.
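A namespace and its quota could be declared along the following lines. This is a sketch under stated assumptions: the quota name and every limit value are hypothetical and would need tuning for a real cluster.

```yaml
# Sketch only: a namespace with a quota that caps what it can consume.
# The quota name and all numbers are illustrative assumptions.
apiVersion: v1
kind: Namespace
metadata:
  name: development
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: development-quota
  namespace: development
spec:
  hard:
    # Maximum number of Pods allowed in the namespace
    pods: "20"
    # Caps on the total CPU and memory requested by all Pods
    requests.cpu: "4"
    requests.memory: 8Gi
    # Caps on the total CPU and memory limits of all Pods
    limits.cpu: "8"
    limits.memory: 16Gi
```

Be aware that once a quota covers CPU or memory, Pods in that namespace generally need to declare matching requests or limits, otherwise their creation may be rejected.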

Basic Commands and Actions

Once you’ve set up your Kubernetes cluster, you can interact with it using kubectl as mentioned earlier. It’s the official command-line client application. Let’s cover a few simple commands you can get started with.

kubectl run

kubectl run starts a new Pod in your cluster, running a specified container image. This starts a Pod called nginx that runs the nginx:latest image:

$ kubectl run nginx --image nginx:latest

pod/nginx created
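For comparison, the Pod created by that command corresponds roughly to the manifest sketched below. The run: nginx label mirrors the one kubectl run adds automatically; the real object also contains defaulted fields that are omitted here.

```yaml
# Sketch only: approximate declarative equivalent of
# "kubectl run nginx --image nginx:latest" (defaulted fields omitted).
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    run: nginx
spec:
  containers:
    - name: nginx
      image: nginx:latest
```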

kubectl get

The get command lists all the objects in your cluster of a particular type, such as pods:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 81s

kubectl describe

describe provides detailed information about a specific named object:

$ kubectl describe pod nginx

Name: nginx

Namespace: default

Priority: 0

Service Account: default

Node: minikube/192.168.49.2

Start Time: Sat, 06 May 2023 13:21:07 +0100

Labels: run=nginx

Annotations: <none>

Status: Running

IP: 10.244.0.8

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m9s default-scheduler Successfully assigned default/nginx to minikube

Normal Pulling 2m8s kubelet Pulling image "nginx:latest"

Normal Pulled 2m kubelet Successfully pulled image "nginx:latest" in 8.202082294s (8.202097725s including waiting)

Normal Created 2m kubelet Created container nginx

Normal Started 2m kubelet Started container nginx

Use this command when you need to troubleshoot problems with an object, such as why a Pod is stuck in the pending state instead of running. The Events list at the bottom of the describe output contains useful information about the actions Kubernetes has taken.

kubectl delete

Remove named objects from your cluster using the delete command:

$ kubectl delete pod nginx

pod "nginx" deleted

Deploying Applications in Kubernetes

You can deploy applications to Kubernetes in several ways. Helm is a popular solution for production use, offering a package manager experience for Kubernetes workloads. However, the kubectl CLI is ideal for getting started.

In the following steps, we’ll demo how to run multiple replicas of an NGINX web server in Kubernetes by creating a Deployment object.

If you don’t have an existing Kubernetes cluster available, try using minikube to quickly start one on your machine. minikube bundles a copy of kubectl.

Creating a Deployment

You can create a new deployment with the following kubectl command:

$ kubectl create deployment nginx --image nginx:latest --replicas 3

deployment.apps/nginx created

This adds a Deployment object named nginx that runs the nginx:latest container image. You can see the object by running the following command:

$ kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 3/3 3 3 45s

This output confirms that three replicas have been requested and all three are ready. The Deployment has automatically created Pods to run your containers:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-654975c8cd-74rjx 1/1 Running 0 113s

nginx-654975c8cd-7mkwk 1/1 Running 0 113s

nginx-654975c8cd-bbphv 1/1 Running 0 113s

There are three Pods in accordance with the replica count you specified. Try deleting one of them:

$ kubectl delete pod nginx-654975c8cd-bbphv

pod "nginx-654975c8cd-bbphv" deleted

Now repeat the command to list the Pods:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-654975c8cd-74rjx 1/1 Running 0 2m57s

nginx-654975c8cd-7mkwk 1/1 Running 0 2m57s

nginx-654975c8cd-vrkvb 1/1 Running 0 19s

There are still three Pods in the cluster. After you deleted the old one, the Deployment noticed the deviation from the three replicas you requested. It automatically added a new Pod to restore availability.

Scaling a Deployment

You can change the replica count using the kubectl scale command. Identify the Deployment to change and the new number of replicas to apply:

$ kubectl scale deployment nginx --replicas 5

deployment.apps/nginx scaled

The Deployment controller should automatically add two new Pods to your cluster, meeting the updated replica count:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-654975c8cd-74rjx 1/1 Running 0 5m11s

nginx-654975c8cd-7mkwk 1/1 Running 0 5m11s

nginx-654975c8cd-b7hg6 1/1 Running 0 37s

nginx-654975c8cd-hnklt 1/1 Running 0 37s

nginx-654975c8cd-vrkvb 1/1 Running 0 2m33s

Updating a Deployment

You can change the properties of a Deployment by running kubectl edit. This opens the Deployment’s YAML manifest in your default editor:

$ kubectl edit deployment nginx

Scroll down the file to find the spec.template.spec.containers section. Change the value of the image property from nginx:latest to nginx:stable-alpine.

Save and close the file.
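For orientation, the relevant part of the manifest should look roughly like the fragment below after the edit. This is an abbreviated sketch: the real file contains many more fields, and the container name shown here is assumed to be nginx.

```yaml
# Fragment only: other fields of the Deployment manifest are omitted.
spec:
  template:
    spec:
      containers:
        - name: nginx                 # assumed container name
          image: nginx:stable-alpine  # changed from nginx:latest
```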

The Deployment replaces your Pods with new ones that use the stable-alpine image tag. Use kubectl to list your cluster’s Pods or Deployments and monitor the rollout:

$ kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 4/5 5 4 10m
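How quickly Pods are replaced is governed by the Deployment’s update strategy. The fragment below is a sketch that spells out what are, to the best of our knowledge, the default values; you would only need to write these fields if you wanted to change them.

```yaml
# Fragment only: rollout-related fields of a Deployment spec.
# The values shown are believed to match the Kubernetes defaults.
spec:
  replicas: 5
  # Number of old revisions retained so that rollbacks remain possible
  revisionHistoryLimit: 10
  strategy:
    type: RollingUpdate
    rollingUpdate:
      # Extra Pods allowed above the replica count during a rollout (rounded up)
      maxSurge: 25%
      # Pods allowed to be unavailable during a rollout (rounded down)
      maxUnavailable: 25%
```

With five replicas, 25 percent rounds down to one unavailable Pod, which is consistent with the 4/5 READY value in the output above.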

Rolling Back a Deployment

Sometimes a change you apply might turn out to be incorrect or undesirable. For example, you could have selected the wrong image or specified an incorrect replica count. Kubernetes handles these situations by letting you roll back with a single command:

$ kubectl rollout undo deployment nginx

deployment.apps/nginx rolled back

The Deployment’s configuration reverts to its previous state.

You can optionally specify a revision number to restore:

$ kubectl rollout undo deployment nginx --to-revision=1

Managing Deployments Declaratively

The examples above used kubectl commands to define and manage the Deployment. While this is convenient for getting started, you would normally write YAML manifests to create your objects. These can be versioned as part of your source code.

Here’s the YAML corresponding to the Deployment created earlier:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest

This demonstrates the label and selector mechanism that Kubernetes uses to link objects together. The Deployment includes a manifest template for its Pods, each of which is assigned the app: nginx label. The Deployment has a matching label selector configured, so Pods labeled app: nginx become part of the Deployment.

Save the YAML as deployment.yaml and apply it to your cluster with the following command:

$ kubectl apply -f deployment.yaml

deployment.apps/nginx created

To modify your Deployment, simply edit your original YAML file. Try changing the spec.replicas field to 5, then repeat the command to apply your change:

$ kubectl apply -f deployment.yaml

deployment.apps/nginx configured

Kubernetes automatically transitions your cluster to your newly desired state, adding new Pods to achieve the updated replica count:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-654975c8cd-8xqd8 1/1 Running 0 91s

nginx-654975c8cd-mjwjp 1/1 Running 0 91s

nginx-654975c8cd-ngnvv 1/1 Running 0 6s

nginx-654975c8cd-qkf2d 1/1 Running 0 6s

nginx-654975c8cd-tb6z6 1/1 Running 0 91s

Conclusion

Kubernetes orchestrates container deployments across clusters of Nodes, modeling application components using objects such as Pods and Deployments. You can scale container replicas without having to manage failures, dependencies, networking, and storage yourself.

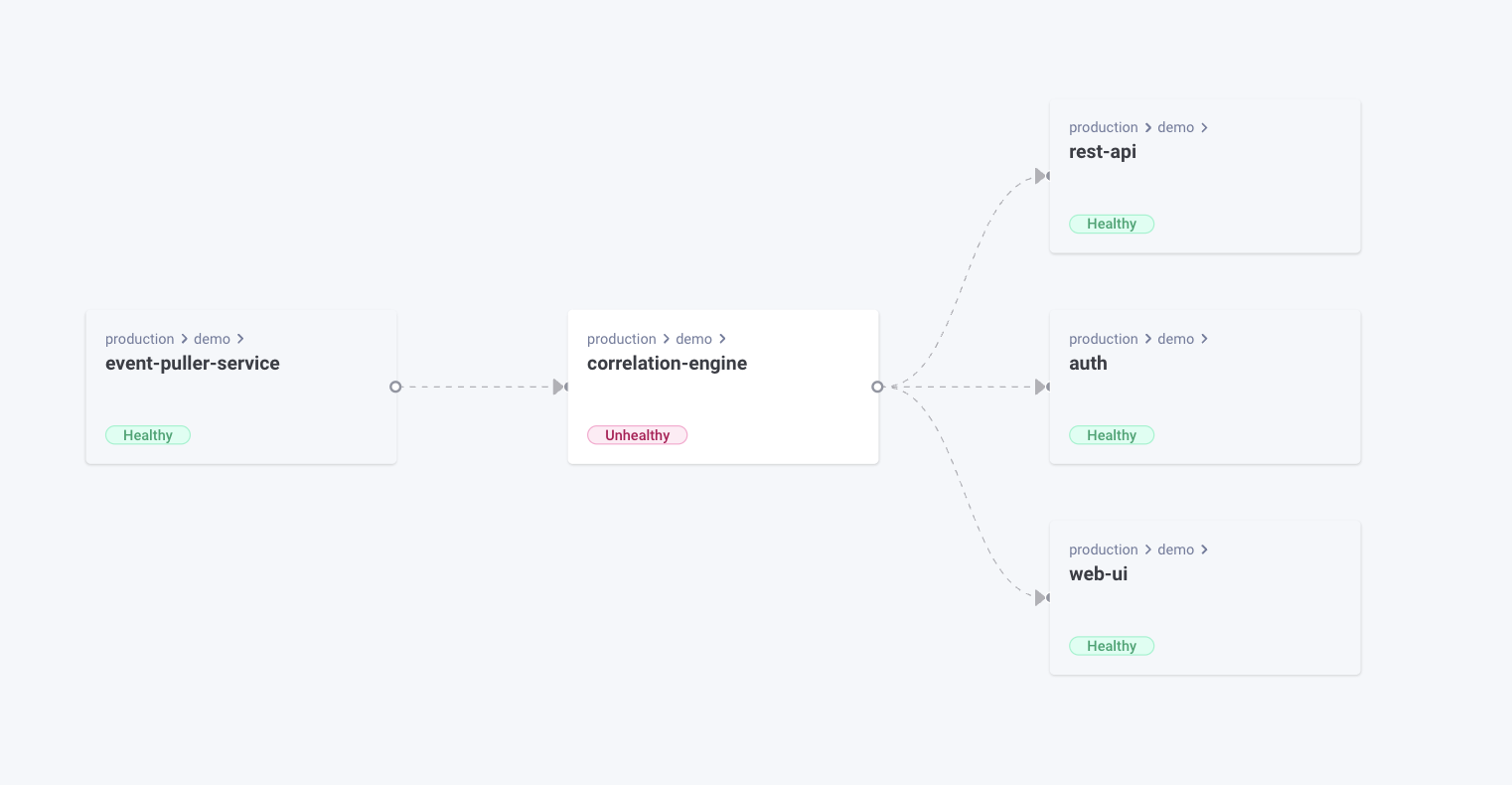

Learning Kubernetes equips you with a skill that’s been in demand for years, within the fast-evolving sphere of cloud-native operations. Understanding Kubernetes also provides you with greater awareness of how code reaches users in production, giving you the same tools that ops teams use to deploy live apps. You can run your own local Kubernetes cluster to accurately replicate production infrastructure on your developer workstation, which can reduce discrepancies between environments.

By now, you’ve explored the basic Kubernetes concepts and seen how to manage a simple container deployment. In Part 2 of this series, we’ll take a closer look at Kubernetes networking and monitoring, so you’re ready to run real-world applications in your cluster. Stay tuned!